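This year’s COMPUTEX was fantastic! In particular, the “Godfather of AI,” NVIDIA CEO Jensen, not only gave a live keynote on June 2 at the National Taiwan University Sports Center in Taipei (also streamed online; see my separate article for the highlights), but also held the NVIDIA Founder and CEO Jensen Huang Global Media Q&A on June 4! I was honored to be invited to this rare event and got to experience Jensen’s trademark humor firsthand. He answered questions from reporters from many countries in a very friendly way, and even turned the tables, greeting them and warmly “teasing” them. Something funny also happened when Jensen Huang entered the room: one overexcited reporter shouted, “Jensen I love You!” and Jensen immediately quipped back, “I love you too!”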

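Since the event allowed photography but no video recording, I can only share some highlight photos with you. Below is part of the Q&A transcript provided by the organizers, with some of my photos mixed in!! In short, don’t overlook the wave of AI business opportunities Jensen Huang is bringing; look for your own opportunity, and you may strike it rich!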

Here are the highlights of the Q&A:

Mylene Mangalindan: Hi everyone. I’m Mylene Mangalindan with corporate communications. If you could, when we get to questions, please introduce yourself and say what outlet you’re with. That would be really helpful to Jensen. Thank you.

Jensen Huang: Okay, I haven’t had breakfast, so breakfast and lunch. Turkey. Turkey sandwich without mayonnaise.

Mylene Mangalindan: All right, we’ve got a gentleman here who’s raising his hand. Can you just wait for the mic? Runners, please?

Hyoungtae Jang (Chosun Daily, South Korea): It’s an honor to have the opportunity to ask you a question. My name is Hyoungtae Jang from Chosun Daily in South Korea. I would like to ask you about HBM. Currently, SK Hynix is NVIDIA’s HBM partner. When will Samsung become an HBM partner? According to rumors, does Samsung’s HBM really fall short of the standards set by NVIDIA?

Jensen Huang: Does anybody have a napkin? Never mind. Your question. HBM memory is very important to us because we need very high-speed memories and very low-power memories. And… I’m not thinking. I just got a piece of turkey in my mouth. Thank you. The question is not too hard. I’m just… I don’t want to, you know…

This chair is kind of like one of those merry-go-rounds where it just kind of keeps moving.

If I just sit still like this, pretty soon, I’m going to be facing this way. I think it’s because the Earth is rotating. It’s kind of like your toilet; you know it always goes one way. It’s not possible to stop this thing. And I can’t reach the ground. I’m stuck. Can somebody just give me one of those chairs? One of those fancy chairs that you guys have? Careful, careful. I really want to eat this, but it has no mayonnaise at all. Yeah. I’ve only been allowed to eat six Tic-Tacs today between meetings. CEOs are not fed very well. Six Tic-Tacs.

Okay. What was your question? I’m just kidding. You’re from Korea.

You would like to ask about Samsung memory? HBM memory is very important to us. As you know, we’re growing very, very fast. We have Hopper H100, H200. We have Blackwell B100, B200. We have Grace Blackwell GB200. The amount of HBM memories that we are ramping is really quite significant. The speed that we need is also very significant. And we work with three partners. All three of them are excellent. SK Hynix, of course, Micron, and Samsung. All three of them will be providing us HBM memories. And we’re trying to get them qualified and get them adopted into our manufacturing as quickly as possible.

Nothing more than that. Excellent. Excellent memory vendors, excellent memory partners.

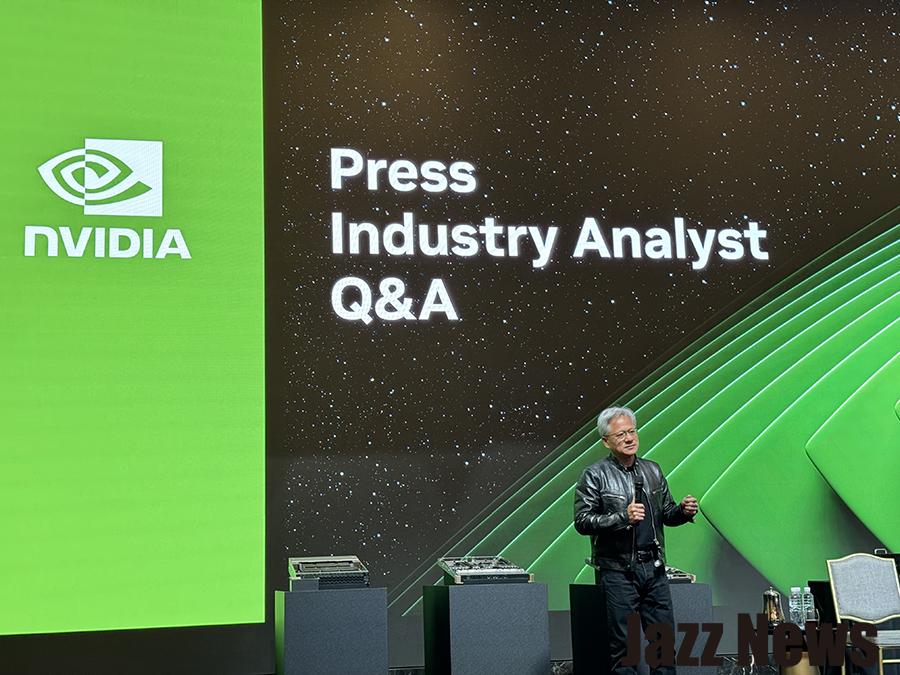

Jensen Huang finished two cups of the hotel’s Americano coffee!

Tom’s Hardware: About two years ago, I asked you if you would be interested in making a chip in Intel’s fabs. And you said yes. Then last year, you told me that you guys had had a test chip and that the process node looked good. So it’s been another year. So I’m curious: Has there been any more progress in that? Is there anything that you could tell us?

Jensen Huang: My interest remains. Good question.

Elaine (Commonwealth Magazine): Hi Jensen. Elaine from Commonwealth Magazine. Nice to see you here, on many occasions, in many countries. So you also built a supercomputer in Taipei? One. Would you consider building a second one in Taiwan, and where? Thank you.

Jensen Huang: Yes. I don’t know. You guys are asking me questions that prompt extremely short answers. If you ask that question to ChatGPT, you will get, “Yes. I don’t know.”

Press Member (Japan): Jensen. I’m from Japan, and here is my question. AI is being used more and more widely in games. It started with DLSS, and now AI can power NPCs that talk to us like live human beings, which is pretty amazing. But do you think it’s possible to go even further, like applying multimodality to games, or even using AI to generate game graphics directly instead of the traditional rasterization rendering pipeline? And we also know that NVIDIA is speeding up the pace of product upgrades and further segmenting your product lines. How will your roadmap affect NVIDIA’s supply chain as well as the entire industry?

Jensen Huang: I do have to caution you guys that I was up at 5:00, and I’ve been running nonstop until now, and I just had three pieces of very dry turkey. My brain function is running at about 25%, so if you ask compounded questions and multiple compounded questions, it is not likely I’m going to remember the questions. The first one has to do with AI for gaming. You are going to see that we, well, we already use AI for neural graphics so that we can infer or generate pixels that we don’t render, or we render very few pixels and we generate a whole bunch of others. As a result, we can achieve very high-quality ray tracing, path tracing, 100% of the time and still achieve excellent frame rates. We also generate frames between frames, not interpolation but frame generation. And so not only do we generate pixels, we also generate frames.

In the future, we’ll even generate textures and generate objects so that the objects will be lower resolution and we can generate higher resolution textures. Textures can be lower quality; we can generate higher quality. So we can even compress the games. And so we’re going to get richer and richer games in the future. And inside the games, all the characters will be AI, right? It’s like having, if you go into a battle with six of your colleagues and you know all those six colleagues, maybe two of them are real people, the other four are AIs and they’ve been playing with you for a long time so they actually remember you. And so you’re going to have AIs that you’re playing along with, you have AIs that you’re adversarial with.

And so the games will be generated with AI. They will have AIs inside and you’ll even have the PC become AI. So the PC could help you play. Your PC will become an excellent assistant. We call it G-Assist.

So, the PC becomes an AI. We’re going to see AI infused into all aspects of gaming and PCs. The second question that you had has something to do with the supply chain. How did you go from games to supply chain? That was a giant leap. The answer is we’re going to see, of course, great new games. It’s going to continue to drive our GeForce business. Our GeForce business is super vibrant. It’s doing great, and it is the largest gaming platform in the world today. We have excellent partners. And I expect GeForce is going to continue to grow. And now, with RTX, the software suite, and the tools that we’ve created so that any GeForce customer can enjoy, either create with AI, create their own AI agents, this is going to be a really fantastic growth time for GeForce again.

Jensen Huang jokingly picked up a product and used it to work out!

Katsuki (Japan): Jensen, on the right over here, on the right. Neither. Hello, my name is Katsuki from Japan. So nice to see you.

Jensen Huang: Yeah, nice to see you again.

Katsuki (Japan): So I have a question about building technology. Last week, Intel and AMD also announced the UALink consortium. They claim NVIDIA’s technology is proprietary, so open is much better. So what do you think about that? I think, historically, this industry has used proprietary technology. Intel is x86. ARM is ARM architecture. And now, NVIDIA is NVLink. So what do you think of open versus proprietary?

Jensen Huang: Performance and cost performance are good for end users. And if you have proprietary technology but good performance and cost performance, end users accept that.

Reporter: So what do you think about open and proprietary?

Jensen Huang: Proprietary and open standards, it’s always been the case, right? The industry has been proprietary and open. Intel is x86, AMD is x86, ARM is ARM architecture, and NVIDIA is NVLink. I think that the best way to think about it is the openness of a platform, the ability to innovate on top of it, and the value it creates for the ecosystem.

Whether it’s proprietary or open, the most important thing is whether it creates value for the ecosystem, whether it drives innovation, and whether it creates opportunities for everybody. And I think NVIDIA has done that. NVLink is incredible technology, but so is our collaboration with the industry. We work with PCI Express and we have our NVSwitch and Quantum-2, our networking products.

We have a lot of open technology that we work on with the industry, and at the same time, we also innovate and create proprietary technology. It’s really not one or the other. It is about creating value for the ecosystem and driving innovation forward.

Mylene Mangalindan: Okay, we’ve got another question here.

Reporter: Jensen, one more question. Can you give us an update on your partnership with Arm?

Jensen Huang: Sure. Our partnership with Arm is fantastic. As you know, we’re working on Grace, our CPU designed specifically for AI and high-performance computing. Grace is built on the Arm architecture, and we’re really excited about it. It’s going to be fantastic for our data centers, and it’s going to be an incredible product for the industry. Our relationship with Arm is very strong. We’re working closely together to bring new technologies to market, and we’re very excited about the future of Arm-based CPUs in our product lineup.

Press Member: Jensen, can you tell us more about your views on the future of AI and how NVIDIA is positioning itself in this space?

Jensen Huang: AI is the most powerful technology force of our time. It will revolutionize every industry, and NVIDIA is at the forefront of this transformation. We are providing the tools, platforms, and systems to drive AI innovation. From GPUs to software libraries like CUDA, TensorRT, and our AI platforms like Clara for healthcare, Isaac for robotics, and Drive for autonomous vehicles, we are enabling the next wave of AI advancements.

Our work with partners across industries ensures that AI can be deployed effectively and that we continue to push the boundaries of what is possible. AI is going to touch every aspect of our lives, and NVIDIA is going to play a crucial role in this journey.

Patrick Murray from PC World: My colleague Gordon Wong couldn’t be here, so he said to say hi. I will do that. I was asking if you could give gamers a taste of what the 50 series brings.

Jensen Huang: 50 series is going to be something I will tell you about later. You do this ten times a day. You’re going to be in good shape. I can’t wait to tell you about the next generation.

Howard Huang from CTS News: You have emphasized data centers several times, but many countries, including Taiwan, are worried about electricity and energy issues. Our Minister of Economic Affairs has also said that Taiwan will not run out of electricity, but with the AI boom, maybe the situation will be different. So my question is, can you give us some suggestions? How should we make our policy in this regard to tackle the energy and electricity problem, make the whole environment more sustainable, and still maintain and satisfy our computing needs? Yeah. Thank you.

Jensen Huang: Three. Three answers in one. One, use accelerated computing. Accelerated computing should be the way that people use computing today. Do not use general-purpose computing; accelerate every application you can, because it saves power. The more you buy, the more you save. It is true. It is absolutely true.

One example after another example, you save money, you save time, and you save energy. Number one, accelerate everything. Number two, generative AI is not about training. It’s about inference. The goal is not to train. The goal is to inference. When you inference, the amount of energy used versus the alternative way of doing computing is much, much lower. For example, I showed you the climate and weather simulation for Taiwan, right? 3,000 times less power. 3,000 times, not 30%. 3,000 times. This happens in one application after another application.

So two, you have to think about AI not just in training but longitudinally. Look over time. The goal is not to train only, but to inference. The inference energy savings are enormous.

Number three, this is my favorite part. AI doesn’t care where it goes to school. The world doesn’t have enough power near population, but the world has a lot of excess energy. You know that, right? The reason why you know is because just the amount of energy coming from the sun is incredible, but it’s in the wrong place. It’s in the places where people don’t want to live. We should set up power plants and data centers for training where we don’t have population. You don’t need to use the power grid for that. Train the model somewhere else. Move the model for inference closer to the people: in their pocket, on phones, in their PCs, in their edge data centers, in cloud data centers. And so, three different ideas: accelerate everything; don’t think just about training, but think about inference; and number three, AI doesn’t care where it goes to school.

Next up was veteran Taiwanese reporter Monica, and Jensen Huang opened with some playful banter!

Monica, DigiTimes: Long time no see. I have a question.

Jensen: Monica has been with DigiTimes for how many years now?

Monica: 22 years.

Jensen: That’s right. I was going to say May I know because I know that Monica from DigiTimes has very tough questions. And so I’m trying to distract her. How are your kids?

Monica, Reporter: Very good. Good, good, good.

Jensen: May I yes. Now will you ask an easy question?

Monica, Reporter: Since CSPs like Google and Microsoft are developing their own AI chips, would that impact NVIDIA? The second question is, will NVIDIA consider doing business? Just would we consider doing… oh, ASIC, ASIC business. Yes. Am I done?

Jensen: Okay, so, NVIDIA is very different. As you know, NVIDIA is not an accelerator. NVIDIA is accelerated computing. Do you understand the difference? I explain it every single year over and over again, but nobody understands. An accelerator for deep learning cannot do SQL data processing. We can. An accelerator for deep learning cannot be used for image processing. We can. An accelerator cannot be used for fluid dynamics simulation. We can. Make sense? NVIDIA is accelerated computing. It’s quite versatile. It is also extremely good at deep learning. Makes sense, right?

So NVIDIA’s accelerated computing is more versatile. Therefore the utilization is higher. When the utilization is higher, the usefulness is higher, and the effective cost is lower.

So let me give you an example. People think that your smartphone is very expensive compared to the phone of a long time ago. A phone a long time ago was $100; a smartphone was more expensive, but the smartphone replaced the music player. It replaced the camera. It replaced your laptop, sometimes. Isn’t that right? So the versatility of the smartphone made it very cost-effective. In fact, it’s the most valuable instrument that we have.

Same thing with NVIDIA accelerated computing. That’s number one. Number two, NVIDIA’s architecture is so broad and so useful. It’s used in every single cloud. It’s in AWS, it’s in GCP, it’s in OCI, it’s in Azure. It’s in regional clouds. It’s in sovereign clouds. It’s everywhere: private clouds, everywhere on-prem, everywhere. And because our reach is so high, we are the first target for any developer. Makes sense? Because if you target, if you program for CUDA, it runs everywhere. If you program for one of the accelerators, it only runs there. So that’s the second reason. Because of the second reason, our value to the customer is very high, because not only do we accelerate the workload for the CSPs, NVIDIA brings them customers, because they’re CUDA customers.

So we bring customers to the cloud. When cloud vendors increase their capacity of NVIDIA, their revenue goes up. When they increase capacity of their own ASIC, their cost goes up. The revenue may not go up. So we bring customers to our clouds, and we’re very pleased about that. So NVIDIA is positioned very differently. One, we’re versatile because we have a lot of great software. Two, we’re in every cloud, everywhere, so we are a great target for developers. And three, we are very valuable to CSPs. We bring them customers.

Finally, Jensen Huang asked that the very last question be a truly… meaningful one! TVBS reporter Isabel from Taiwan asked:

Isabel, TVBS World Taiwan: I’d love to ask the last question.

Jensen Huang: Okay, bring it on.

Isabel, TVBS World Taiwan: Well, my name is Isabel. I’m from TVBS World Taiwan.

Jensen: Ladies and gentlemen, this is Isabel. She’s going to ask the last happy question.

Isabel: Geopolitical.

Jensen: Oh, my goodness. As the last question before we all go off, Isabel would like to ask about geopolitics. That’s fine. Bring it. Yeah.

Isabel: So actually, earlier you talked about how the major risk for you right now would be AI: the complicated AI and the technology.

Jensen: Yeah. It’s very complicated. So we’ve been built before. Yes I know.

Isabel: And then, because you have a lot of announcements and significant investments moving forward in Taiwan, aren’t you worried about the geopolitical risk here from having those investments and…

Jensen: I’m sorry, Isabel, I’m sorry.

Isabel: Well, I mean, what are the major criteria you’ve been looking for in choosing the city to build your data center or headquarters?

Jensen: Major criteria, major criteria: a nice piece of land. Other than that… well, well, a nice piece of land. If you can find me a nice piece of land where I can build a large headquarters here in Taipei, you know, I would. Yeah. This is pretty close. This is because we already have hundreds of people here. You know, we have, I think, some 500 people here already. And so this will be a nice, convenient place.

But your question is, how does it look?

Listen, we build in Taiwan because TSMC is so good. It’s not normal.

You know, it’s not like, hey, I can buy from TSMC, I can buy from 7-Eleven, I can buy… You know, TSMC has incredible advanced technology, incredible work ethic, super flexible. Our two companies have been working together for a quarter of a century or longer.

So we know each other’s rhythm. It’s almost like we don’t have to say anything, just like, you know, when we work with our friends. You don’t have to say anything. We just understand each other.

And so we can build very complicated things at very high volume, at very high speed. With TSMC, this is not normal. You can’t just randomly, you know, have somebody else just go do it.

The ecosystem here is incredible. TSMC, upstream of TSMC, downstream of TSMC. The ecosystem is really rich. Amazing companies doing amazing things.

And we’ve been working with them for a quarter of a century.

And then as soon as you’re done with building the chip, the technology is incredible. The technology is incredible. The packaging technology is incredible. SPIL is incredible.

Then when you put all this stuff together, you have to test it. The testing houses are incredible. Then you’ve got to assemble into systems. They, the system makers, are incredible.

The logistics are incredible. If we could build this somewhere else, we would think about it. But quite frankly, very difficult.

And so yes, there are many considerations in life, but the most important consideration is possibility. I need to be able to make it at all.

And so TSMC, the ecosystem around here, Foxconn, Quanta, Wistron, Wiwynn. You know, Inventec, Pegatron, there’s so many. Asus, MSI, Gigabyte – I mean these are amazing companies, underappreciated, unsung heroes. Really, truly true. And so I think if you’re from Taiwan, you should be very proud of the ecosystem here.

If you’re a Taiwanese company, you should be very proud of your achievements. This is quite an extraordinary, extraordinary place. And I’m very proud of all of my partners here. I’m very grateful for all that they’ve done for us and all the support that they’ve provided us over the years.

And I’m very excited that this is a new start, built upon all of the expertise that these companies have created over the years.

All these amazing companies have developed amazing expertise over the last two and a half decades, three decades. And now this new era is coming, and it’s even bigger than the last ones, bigger than all of the previous ones combined.

And so I’m very happy for the prosperity, and the opportunity and the exciting future ahead of all of you. Thank you very much.

At the end of the event, I rushed over to Jensen Huang and was thrilled to get his autograph!
